
LAN: eth0, 192.168.0.1/24

ISP1: eth1, 192.168.1.1/24, gateway 192.168.1.2

ISP2: eth2, 192.168.2.1/24, gateway 192.168.2.2

Here is how I would do it using the iptables method.

Route tables

First, edit /etc/iproute2/rt_tables to add a mapping between route table numbers and ISP names, so that tables 10 and 20 are for ISP1 and ISP2, respectively. I need to populate these tables with the routes from the main table, using this code snippet (which I have taken from hxxp://linux-ip.net/html/adv-multi-internet.html):

Then add a default route for ISP1 through ISP1's gateway:

Do the same for ISP2. Now I have two route tables, one for each ISP.

Iptables

OK, now I use iptables to distribute traffic evenly between the two route tables. More info on how this works can be found here (http://www.diegolima.org/wordpress/?p=36) and here (http://home.regit.org/?page%5Fid=7).

NAT

Well, NAT is easy:

http://www.refmanual.com/2012/11/16/measure-unix-iops/
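The following is a minimal shell sketch of the whole two-ISP setup described above. It is not the original snippet from linux-ip.net or from the linked articles. It rests on several assumptions:

- The interface names and addresses are the ones listed above.
- Route tables named ISP1 and ISP2 are registered in /etc/iproute2/rt_tables as `10 ISP1` and `20 ISP2`.
- Balancing is done per connection, using the iptables `statistic` match (`nth` mode) together with `CONNMARK`.
- The NAT step uses `SNAT`.

The helper names (`run`, `copy_main_routes`, `setup_isp`, `balance`, `nat`) are hypothetical. So is the `DRY_RUN=1` switch, which prints each command instead of running it.

```shell
#!/bin/sh
# Minimal sketch of the two-ISP setup (assumptions, not the original snippets):
#  - /etc/iproute2/rt_tables already contains the two lines
#        10 ISP1
#        20 ISP2
#  - interfaces and addresses are the ones listed in the post
#  - DRY_RUN=1 (hypothetical switch) prints each command instead of executing it

LAN_IF=eth0
ISP1_IF=eth1; ISP1_IP=192.168.1.1; ISP1_GW=192.168.1.2
ISP2_IF=eth2; ISP2_IP=192.168.2.1; ISP2_GW=192.168.2.2

# Execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Read routes on stdin (one per line, as printed by "ip route show table main")
# and add every non-default one to route table $1.
copy_main_routes() {
    grep -v '^default' | while read -r route; do
        # $route is deliberately unquoted so it splits into separate arguments.
        run ip route add table "$1" $route
    done
}

# Build table $1 for the ISP on interface $2 with local address $3 and gateway $4.
setup_isp() {
    ip route show table main | copy_main_routes "$1"
    run ip route add default via "$4" dev "$2" table "$1"
    # Traffic sourced from this ISP's address must leave through this ISP.
    run ip rule add from "$3" table "$1"
}

# Alternate NEW connections from the LAN between mark 1 and mark 2, then copy
# the connection mark onto every packet so a connection stays on one ISP.
balance() {
    run iptables -t mangle -A PREROUTING -i "$LAN_IF" -m conntrack --ctstate NEW \
        -m statistic --mode nth --every 2 --packet 0 -j CONNMARK --set-mark 1
    run iptables -t mangle -A PREROUTING -i "$LAN_IF" -m conntrack --ctstate NEW \
        -m statistic --mode nth --every 2 --packet 1 -j CONNMARK --set-mark 2
    run iptables -t mangle -A PREROUTING -i "$LAN_IF" -j CONNMARK --restore-mark
    run ip rule add fwmark 1 table ISP1
    run ip rule add fwmark 2 table ISP2
}

# Enable forwarding and rewrite the source address for each uplink.
nat() {
    run sysctl -w net.ipv4.ip_forward=1
    run iptables -t nat -A POSTROUTING -o "$ISP1_IF" -j SNAT --to-source "$ISP1_IP"
    run iptables -t nat -A POSTROUTING -o "$ISP2_IF" -j SNAT --to-source "$ISP2_IP"
}

main() {
    setup_isp ISP1 "$ISP1_IF" "$ISP1_IP" "$ISP1_GW"
    setup_isp ISP2 "$ISP2_IF" "$ISP2_IP" "$ISP2_GW"
    balance
    nat
}

# Only touch the system when explicitly asked to.
if [ "${1:-}" = apply ]; then
    main
fi
```

To preview the commands, run `DRY_RUN=1 sh multi-isp.sh apply`. To apply them, run `sh multi-isp.sh apply` as root (the file name is arbitrary). Marking whole connections instead of single packets keeps every NATed connection on one ISP. Alternating individual packets would send a single connection out with two different source addresses.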

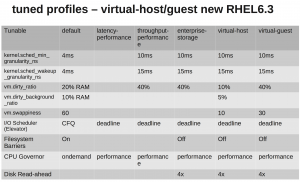

See the tables below.

Profiles: `default`, `latency-performance`, `throughput-performance`, `enterprise-storage`, `virtual-guest`, `virtual-host`.

1. Get a signed server certificate for TLS-protected authentication.
2. Edit sendmail.mc as below. Port 587 is listened on by default, so there is no need to add it to the .mc file.

   ```
   dnl A: AUTH= parameter on MAIL FROM only after successful authentication
   dnl p: no PLAIN/LOGIN unless a security layer (TLS) is active
   dnl y: no anonymous mechanisms
   define(`confAUTH_OPTIONS', `A p y')dnl
   TRUST_AUTH_MECH(`LOGIN PLAIN')dnl
   define(`confAUTH_MECHANISMS', `LOGIN PLAIN')dnl
   define(`confCACERT_PATH', `/etc/pki/tls/certs')dnl
   define(`confCACERT', `/etc/pki/tls/certs/gd_bundle.crt')dnl
   define(`confSERVER_CERT', `/etc/pki/tls/certs/server.crt')dnl
   define(`confSERVER_KEY', `/etc/pki/tls/certs/server.key')dnl
   dnl for implicit TLS (SMTPS) on this port sendmail also needs the M=s modifier
   DAEMON_OPTIONS(`Port=465,Addr=0.0.0.0, Name=MTA')dnl
   ```

3. Install saslauthd, and make sure the following package is also installed: cyrus-sasl-plain.
4. Check /etc/sysconfig/saslauthd. It should look like this:

   ```
   MECH=pam
   # these two settings are the defaults
   SOCKETDIR=/var/run/saslauthd
   FLAGS=
   ```

5. Check /etc/sasl2/Sendmail.conf. It should contain:

   ```
   pwcheck_method:saslauthd
   ```

6. Restart both services:

   ```
   service saslauthd restart; service sendmail restart
   ```

7. Connect with the command below, then enter `EHLO localhost`:

   ```
   openssl s_client -starttls smtp -connect localhost:587
   ```

   The server replies:

```
250-testhost.ie.cuhk.edu.hk Hello localhost [127.0.0.1], pleased to meet you
250-ENHANCEDSTATUSCODES
250-PIPELINING
250-EXPN
250-VERB
250-8BITMIME
250-SIZE 200000000
250-DSN
250-ETRN
250-AUTH LOGIN PLAIN
250-DELIVERBY
250 HELP
```
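The check in step 7 can also be scripted. The sketch below assumes a POSIX shell with `tr`, `sed` and `grep`. It extracts the mechanisms from the `250-AUTH` line of an EHLO reply.

The function names are hypothetical helpers, not part of sendmail or OpenSSL. The `probe` wrapper simply pipes `EHLO` and `QUIT` into the same `openssl s_client` command used above.

```shell
#!/bin/sh
# Hypothetical helpers for checking step 7 automatically.

# auth_mechs: read an EHLO response on stdin and print the advertised SASL
# mechanisms (e.g. "LOGIN PLAIN"). Accepts both the "250-AUTH ..." form and
# the older "250-AUTH=..." form; carriage returns are stripped first.
auth_mechs() {
    tr -d '\r' | sed -n 's/^250[- ]AUTH[ =]//p'
}

# has_mech NAME: succeed if mechanism NAME appears in the EHLO response on stdin.
has_mech() {
    auth_mechs | tr ' ' '\n' | grep -qx "$1"
}

# probe HOST:PORT: send EHLO and QUIT after STARTTLS, as in step 7.
# -quiet hides certificate details and keeps reading after stdin ends.
probe() {
    printf 'EHLO localhost\r\nQUIT\r\n' |
        openssl s_client -starttls smtp -connect "$1" -quiet 2>/dev/null
}
```

For example, `probe localhost:587 | has_mech PLAIN && echo 'AUTH PLAIN is offered'` should succeed on a server that answers like the one above.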
