You can use MiniTool Partition Wizard Home Edition.


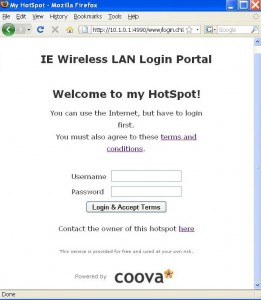

Use the commands todos and fromdos for the conversion.

Set the MTU of vSwitch0 to 9000 with esxcfg-vswitch -m 9000 vSwitch0, then list the vSwitches to check the result with esxcfg-vswitch -l. See http://blog.scottlowe.org/2009/06/23/new-user-networking-config-guide/

Add -o resvport as the mount option, because a Linux server will refuse NFS requests coming from a non-reserved source port (reserved ports are those below 1024).

How To: Network / TCP / UDP Tuning

1. Make sure that you have root privileges.
2. Type: sysctl -p | grep mem
3. Type: sysctl -w net.core.rmem_max=8388608
4. Type: sysctl -w net.core.wmem_max=8388608
5. Type: sysctl -w net.core.rmem_default=65536
6. Type: sysctl -w net.core.wmem_default=65536
7. Type: sysctl -w net.ipv4.tcp_mem='8388608 8388608 8388608'
8. Type: sysctl -w net.ipv4.tcp_rmem='4096 87380 8388608'
9. Type: sysctl -w net.ipv4.tcp_wmem='4096 65536 8388608'
10. Type: sysctl -w net.ipv4.route.flush=1

Quick step: sysctl -w net.core.rmem_max=8388608

Another set of parameters suggested by VMware Communities:

I set this socket option as one of the performance tuning settings on my production Samba server long ago, in the 100 Mbit/s network era. Many Samba servers that I configured later inherited this configuration naturally. However, when I tested the Samba performance of my most recently configured Dell PowerEdge data server, the result was astonishing: its throughput was only around 12 to 15 MB/s. Even a cheap recent NAS can easily approach 80 to 90 MB/s over Samba on a gigabit network. I figured there must be something wrong in the Samba config on the Dell server. After a quick search, I found the read and write buffer settings in the socket options in smb.conf:

BTW, I think I can use this option to SLOW DOWN the Samba throughput of a modern server if Samba consumes too many resources.

Remember not to install the kvm kernel modules; otherwise you will get the error "failed to initialize monitor device" when you start a VM under VMware Server 2.0. BTW, you also need to follow the instructions in this page to make VMware Server 2.0 work properly under CentOS 5.5. As a result, it seems that I can't run KVM-based VMs and VMware Server VMs side by side.

I used chillispot as the wireless LAN captive portal for a long time. It is one of the best FREE captive portals. Though it is quite simple and its performance is less than optimal, I used it for our department's wireless LAN login. However, it is defunct now. Its successor is coovachilli, but its documentation is ... really NONE. Many of the config options have to be read from the source (conf/functions). Anyway, I have tried it and at least found my most needed features in it (a command to get user connection information and a good-looking miniportal page).

1. There's no miniportal sample in the stable source, so I had to use SVN to get it. In short, you should use SVN to get the source and compile it with openssl enabled to enable the https login page.
2. The options are not fully documented in the default config file; view the functions file to get a full list. Eg: the
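The network / TCP / UDP tuning steps listed earlier can be collected into one small script. This is only a sketch: the function names and the DRY_RUN variable are made up for this example, and the values are simply the ones from the steps, not a recommendation for every server. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: apply the buffer settings from the tuning steps in one pass.
# DRY_RUN, tuning_settings and apply_tuning are names invented for this
# example. DRY_RUN defaults to 1, so nothing is changed unless you set
# DRY_RUN=0 and run the script as root.

# One key=value pair per line, taken from the numbered steps.
tuning_settings() {
    cat <<'EOF'
net.core.rmem_max=8388608
net.core.wmem_max=8388608
net.core.rmem_default=65536
net.core.wmem_default=65536
net.ipv4.tcp_mem=8388608 8388608 8388608
net.ipv4.tcp_rmem=4096 87380 8388608
net.ipv4.tcp_wmem=4096 65536 8388608
net.ipv4.route.flush=1
EOF
}

# Print each command in dry-run mode, otherwise pass it to sysctl -w.
apply_tuning() {
    tuning_settings | while IFS= read -r setting; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            printf 'sysctl -w "%s"\n' "$setting"
        else
            sysctl -w "$setting"
        fi
    done
}

apply_tuning
```

Values set with sysctl -w last only until the next reboot. To keep them, the same key=value lines would normally go into /etc/sysctl.conf.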