On-the-fly Feature Importance Mining for Person Re-Identification PR'12 Re-ID ECCV'12

Introduction

State-of-the-art person re-identification methods seek robust person matching by combining various feature types. Often, these features are implicitly assigned generic weights, which are assumed to be universally and equally good for all individuals, independent of people’s different appearances. In this study, we show that certain features play a more important role than others under different viewing conditions. To explore this characteristic, we propose a novel unsupervised approach to bottom-up feature importance mining, performed on-the-fly and specific to each re-identification probe image, so that features extracted from different individuals are weighted adaptively, driven by their salient and inherent appearance attributes. Extensive experiments on three public datasets give insights into how feature importance can vary depending on both the viewing condition and a specific person’s appearance. They also demonstrate that unsupervised bottom-up feature importance mining specific to each probe image can facilitate more accurate re-identification, especially when it is combined with generic universal weights obtained using existing distance metric learning methods.

Contribution Highlights

- While most existing person re-identification methods focus on supervised top-down feature importance learning, we provide empirical evidence that benefits can be gained from unsupervised bottom-up feature importance mining guided by a person’s appearance attribute classification. To the best of our knowledge, this is the first study that systematically investigates the role of different feature types in relation to appearance attributes for person re-identification.

- We formulate a novel unsupervised approach for on-the-fly mining of feature importance specific to a person’s appearance attributes. Specifically, we introduce the concept of learning groupings of appearance attributes to guide bottom-up feature importance mining. Moreover, we define an error-gain-based criterion to systematically quantify feature importance for the re-identification of each specific probe image.
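As a rough illustration of what an error-gain criterion can look like, the sketch below scores each feature by how much the classification error rises when that feature's values are shuffled across samples. This is a generic permutation-style importance under stated assumptions, not the paper's exact formulation: the nearest-centroid classifier, the function names and the toy data are all hypothetical stand-ins.

```python
import random

def nearest_centroid_error(samples, labels, centroids):
    """Fraction of samples whose nearest centroid carries a different label."""
    errors = 0
    for x, y in zip(samples, labels):
        pred = min(
            centroids,
            key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])),
        )
        errors += pred != y
    return errors / len(samples)

def error_gain_importance(samples, labels, centroids, n_repeats=20, seed=0):
    """Mean increase in error after shuffling each feature column in turn."""
    rng = random.Random(seed)
    base = nearest_centroid_error(samples, labels, centroids)
    gains = []
    for j in range(len(samples[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [x[j] for x in samples]
            rng.shuffle(column)
            # Rebuild every sample with feature j replaced by a shuffled value.
            permuted = [x[:j] + [v] + x[j + 1:] for x, v in zip(samples, column)]
            total += nearest_centroid_error(permuted, labels, centroids) - base
        gains.append(total / n_repeats)
    return gains

# Toy data (made up): feature 0 separates the two groups, feature 1 does not.
samples = [[0.0, 0.5], [0.1, 0.4], [0.2, 0.6], [0.9, 0.5], [1.0, 0.4], [0.8, 0.6]]
labels = [0, 0, 0, 1, 1, 1]
centroids = {0: [0.1, 0.5], 1: [0.9, 0.5]}
gains = error_gain_importance(samples, labels, centroids)
```

In this toy setting, shuffling the uninformative feature leaves the error unchanged, so its gain is zero, while shuffling the discriminative feature raises the error and therefore earns a larger importance score.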

Citation

- On-the-fly Feature Importance Mining for Person Re-Identification
  C. Liu, S. Gong, and C. C. Loy
  Pattern Recognition, vol. 47, no. 4, pp. 1602-1615, 2014 (PR)

- Person Re-Identification: What Features are Important?
  C. Liu, S. Gong, C. C. Loy, and X. Lin
  in Proceedings of European Conference on Computer Vision, International Workshop on Re-Identification, pp. 391-401, 2012 (Re-Id @ ECCV)

Images

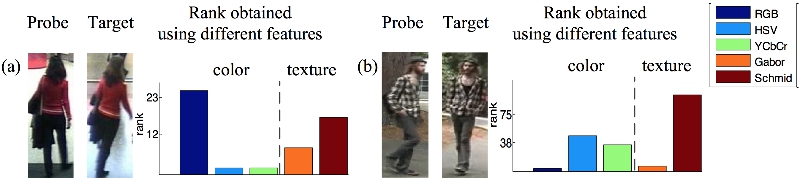

Certain appearance features can be more important than others in describing an individual and distinguishing him/her from other people. For instance, colour is more informative for describing and distinguishing an individual wearing a textureless bright red shirt, but texture information can be equally or more critical for a person wearing a plaid shirt.

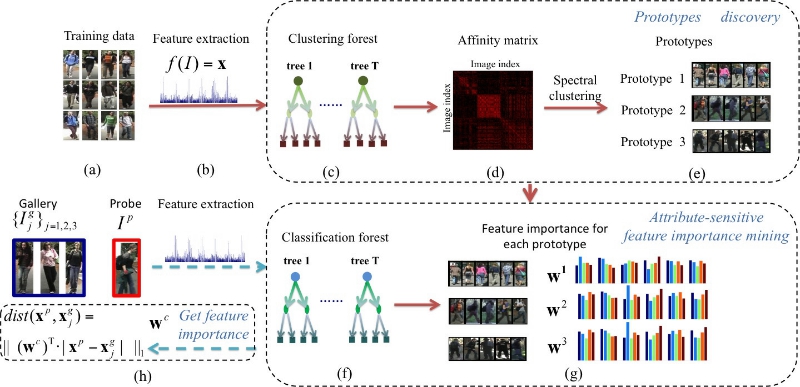

Overview of attribute-sensitive feature importance mining. Training steps are indicated by red solid arrows and testing steps by blue dashed arrows. The three main steps are: (1) discovering prototypes with a clustering forest; (2) mining attribute-sensitive feature importance for each prototype; (3) determining the feature importance of a probe image on-the-fly.
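Step (3) can be sketched as a lookup: given prototypes and their feature weights from training, a probe image borrows the weights of the prototype it is closest to. The sketch below is a simplification under stated assumptions. It uses a nearest-centre rule in place of the clustering forest, and the prototype names, centres and weights are hypothetical values chosen only for illustration.

```python
import math

# Hypothetical outputs of training steps (1) and (2): each prototype has a
# centre in feature space and one weight per feature type. Values are made up.
PROTOTYPES = {
    "plain_bright_top": {
        "centre": [0.9, 0.1],
        "weights": {"colour": 0.8, "texture": 0.2},
    },
    "patterned_top": {
        "centre": [0.3, 0.8],
        "weights": {"colour": 0.4, "texture": 0.6},
    },
}

def probe_feature_importance(probe, prototypes):
    """Return the name and feature weights of the prototype nearest to the probe."""
    name = min(
        prototypes,
        key=lambda k: math.dist(probe, prototypes[k]["centre"]),
    )
    return name, prototypes[name]["weights"]

# A probe whose descriptor lies close to the first prototype's centre.
name, weights = probe_feature_importance([0.85, 0.2], PROTOTYPES)
```

Because the weights are precomputed per prototype, the cost at test time is only the assignment of the probe to a prototype, which is what makes an on-the-fly scheme practical.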

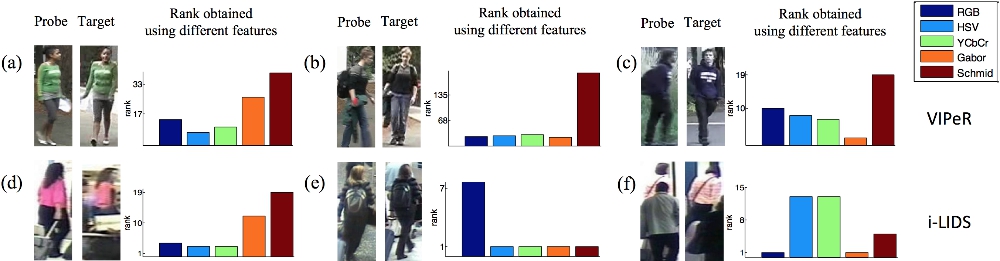

In each subfigure, we show the probe image and the target image, together with the rank of the correct match obtained using each feature type separately. No single feature type was able to consistently outperform the others.

The results suggest that the overall matching performance can potentially be boosted by weighting features selectively according to the inherent appearance attributes.
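A minimal sketch of how the rank of the correct match changes with the feature weighting is given below. It assumes each image is described by one vector per feature type and that distances are weighted sums of squared differences; the function names, the gallery and all numbers are hypothetical and are not taken from the experiments.

```python
def weighted_distance(probe, candidate, weights):
    """Sum of per-feature-type squared distances, scaled by the given weights."""
    return sum(
        w * sum((a - b) ** 2 for a, b in zip(probe[f], candidate[f]))
        for f, w in weights.items()
    )

def rank_of_match(probe, gallery, true_id, weights):
    """1-based rank of the correct identity after sorting the gallery by distance."""
    order = sorted(
        gallery,
        key=lambda pid: weighted_distance(probe, gallery[pid], weights),
    )
    return order.index(true_id) + 1

# Toy example (made up): the probe wears a patterned top, so texture is the
# more discriminative cue, while identity "B" happens to share its colour.
probe = {"colour": [0.5, 0.5], "texture": [0.9, 0.1]}
gallery = {
    "A": {"colour": [0.6, 0.5], "texture": [0.85, 0.15]},  # correct match
    "B": {"colour": [0.5, 0.5], "texture": [0.2, 0.8]},
    "C": {"colour": [0.1, 0.9], "texture": [0.8, 0.2]},
}

rank_colour = rank_of_match(probe, gallery, "A", {"colour": 1.0, "texture": 0.0})
rank_texture = rank_of_match(probe, gallery, "A", {"colour": 0.0, "texture": 1.0})
rank_weighted = rank_of_match(probe, gallery, "A", {"colour": 0.3, "texture": 0.7})
```

Here colour alone places the correct match second, behind the look-alike "B", whereas texture alone or a texture-leaning weighting places it first. For a different probe the situation could be reversed, which is the motivation for choosing weights per probe.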

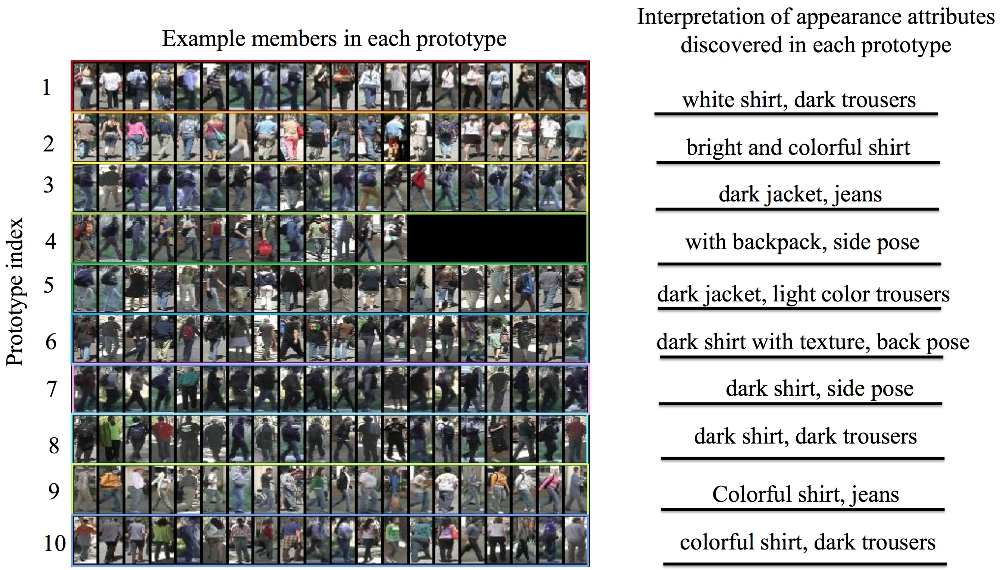

Each prototype represents a low-dimensional manifold cluster that models similar appearance attributes. Each image row in the figure shows a few examples of images in a particular prototype, with their interpreted unsupervised attributes listed on the right.

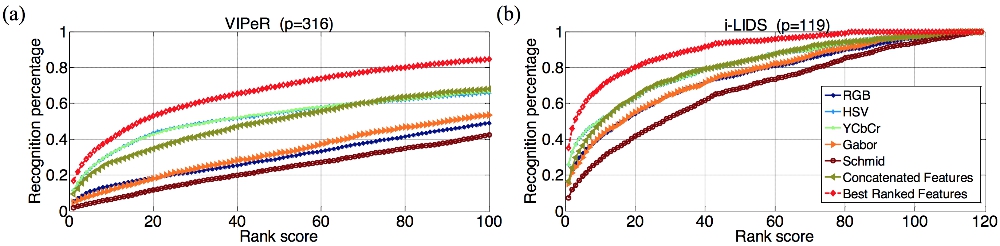

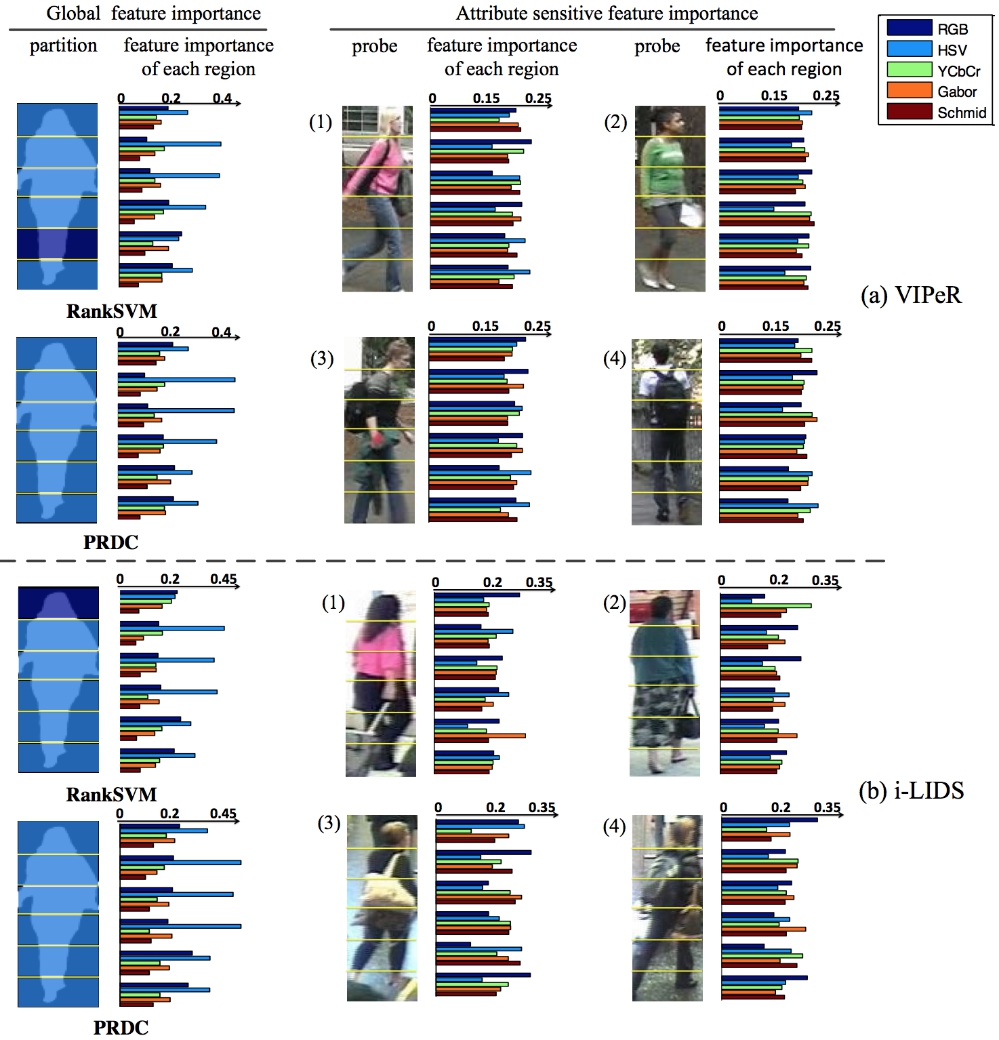

In general, the global feature importance places more emphasis on the colour features for all regions, whereas the texture features are assigned higher weights in the leg region than in the torso region. This weight assignment is applied universally to all images. In contrast, the attribute-sensitive feature importance is more person-specific.
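Since the abstract reports that probe-specific importance helps most when combined with generic universal weights, one simple way to picture the combination is a convex blend of the two weight sets. The sketch below is an assumption made for illustration only: the blending rule, the parameter `alpha`, the region and feature keys, and all weight values are hypothetical, and the paper may fuse the two sources differently.

```python
def blend_weights(global_w, specific_w, alpha=0.5):
    """Convex combination of universal and probe-specific weights, renormalised to sum to 1."""
    mixed = {
        k: (1 - alpha) * global_w[k] + alpha * specific_w[k]
        for k in global_w
    }
    total = sum(mixed.values())
    return {k: v / total for k, v in mixed.items()}

# Made-up universal weights: colour dominates, texture matters more for legs.
global_w = {
    "torso_colour": 0.4,
    "torso_texture": 0.1,
    "leg_colour": 0.3,
    "leg_texture": 0.2,
}
# Made-up weights for a probe wearing a patterned top.
specific_w = {
    "torso_colour": 0.2,
    "torso_texture": 0.5,
    "leg_colour": 0.2,
    "leg_texture": 0.1,
}

blended = blend_weights(global_w, specific_w, alpha=0.5)
```

Setting `alpha` to 0 recovers the universal weights and setting it to 1 uses only the probe-specific ones, so the parameter controls how strongly an individual's appearance overrides the generic weighting.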